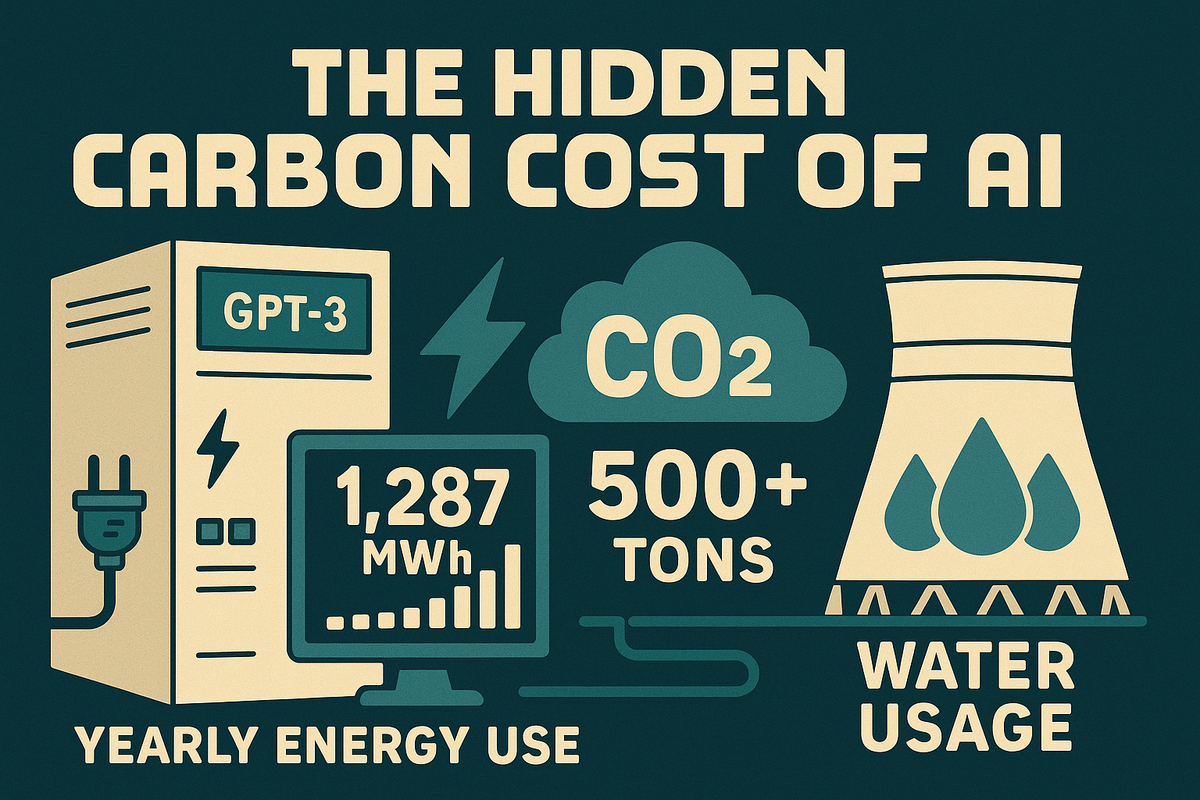

The Hidden Carbon Cost of AI: How Smart Machines Impact Our Planet

Every time you ask ChatGPT a question, generate an image, or use an AI-powered search, a vast network of servers springs into action.

Those glowing chips and humming data centers consume energy... a lot of it.

AI feels weightless, but its computations are grounded in real electricity, hardware, and water use. Behind the friendly chatbot sits a carbon footprint larger than most people realize.

⚙️ Training: The Energy-Intensive Beginning

Before an AI model can chat with you, it must be trained. This means processing billions of words, images, and patterns, a task that requires massive computational power.

- Training GPT-3 alone consumed about 1,287 megawatt-hours of electricity, roughly the yearly energy use of 120 U.S. homes.

- It emitted an estimated 500+ tons of CO₂, depending on where the energy came from.

- Cooling those GPU-packed data centers takes thousands of liters of water daily.

And these are just training runs. Each new version (GPT-4, Gemini, Claude) pushes the limits even further.
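The GPT-3 figures above can be sanity-checked with a few lines of arithmetic. The sketch below is a rough back-of-the-envelope check, not a measurement: the household average (about 10,700 kWh per year) and the two grid carbon intensities are illustrative assumptions, and the function names are made up for this example.

```python
# Back-of-the-envelope check of the GPT-3 training figures quoted in the article.
TRAINING_ENERGY_MWH = 1_287   # figure quoted in the article
HOME_KWH_PER_YEAR = 10_700    # assumption: rough average for a U.S. household


def homes_equivalent(energy_mwh, home_kwh_per_year=HOME_KWH_PER_YEAR):
    """Number of homes whose yearly electricity use matches energy_mwh."""
    return energy_mwh * 1_000 / home_kwh_per_year


def emissions_tonnes(energy_mwh, kg_co2_per_kwh):
    """Tonnes of CO2 for a given grid carbon intensity.

    MWh -> kWh multiplies by 1,000 and kg -> tonnes divides by 1,000,
    so the two conversions cancel out.
    """
    return energy_mwh * kg_co2_per_kwh


if __name__ == "__main__":
    print(f"Homes powered for a year: {homes_equivalent(TRAINING_ENERGY_MWH):.0f}")
    # Both intensities are illustrative assumptions, not reported values.
    for label, intensity in [("fossil-heavy grid", 0.4), ("low-carbon grid", 0.05)]:
        tonnes = emissions_tonnes(TRAINING_ENERGY_MWH, intensity)
        print(f"{label}: about {tonnes:.0f} tonnes of CO2")
```

With these assumptions the same training run lands at roughly 120 homes and a little over 500 tonnes of CO₂ on the fossil-heavy grid, but only a fraction of that on the low-carbon one, which is why the estimate depends so much on where the energy came from.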

💬 Think of training a large model like charging a global-scale battery — it’s a one-time cost, but a huge one.

⚡ Inference: The Everyday Footprint

Once trained, AI systems move to the “inference” stage, generating responses to user inputs.

It’s the stage we interact with daily.

- By a commonly cited estimate, a single ChatGPT query consumes around 3–4 watt-hours, similar to leaving a 60-watt bulb on for three to four minutes.

- Image or video generation can use 10–50× more power.

- Multiply that by hundreds of millions of users every day, and you get an invisible but rapidly growing carbon curve.

Even simple prompts add up. Every “quick” brainstorm or rewritten email is a small spark in a vast energy network.
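To see how those small sparks add up, here is a minimal sketch of the arithmetic. The per-query energy comes from the estimate quoted above; the daily query count is a purely hypothetical input chosen for illustration, not a reported usage figure.

```python
# Rough scaling of per-query energy to a daily total.

def bulb_minutes(query_wh, bulb_watts=60):
    """Minutes a bulb of bulb_watts must run to use query_wh watt-hours."""
    return query_wh * 60 / bulb_watts


def daily_energy_mwh(queries_per_day, query_wh):
    """Total daily energy in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return queries_per_day * query_wh / 1_000_000


if __name__ == "__main__":
    HYPOTHETICAL_QUERIES_PER_DAY = 100_000_000  # assumption, for illustration only
    for wh in (3, 4):
        print(
            f"{wh} Wh per query = {bulb_minutes(wh):.0f} min of a 60 W bulb, "
            f"{daily_energy_mwh(HYPOTHETICAL_QUERIES_PER_DAY, wh):.0f} MWh per day"
        )
```

At 3 Wh per query, a hypothetical 100 million queries a day works out to about 300 MWh daily, so under these assumptions a few days of inference would match the 1,287 MWh quoted for training GPT-3.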

📈 Why It’s Growing So Fast

AI isn’t slowing down. It’s accelerating across every product we use.

Today, models don’t just answer text; they generate images, code, and even videos.

This expansion means:

- Bigger models with more parameters.

- More frequent retraining as data evolves.

- Always-on infrastructure powering 24/7 availability.

The International Energy Agency (IEA) estimates that AI data center electricity demand could rise tenfold by 2030, outpacing global efficiency improvements.

💬 AI’s intelligence curve is rising — but so is its energy curve.

🌱 The Green AI Movement

The good news? Tech companies are waking up to this challenge.

- Google and Microsoft have pledged to run their data centers on carbon-free energy by 2030, and Amazon has committed to net-zero carbon emissions by 2040.

- AI researchers are building smaller, specialized models that deliver results with a fraction of the energy.

- Some labs schedule heavy training during renewable energy peaks to reduce emissions.

- Tools like Climatiq and WattTime now help estimate AI-related carbon footprints and track how clean the grid is in near real time.
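The idea of scheduling heavy training during renewable peaks can be sketched as a simple search over a carbon-intensity forecast. This is a minimal illustration, not how any particular lab does it: the function name and the hourly forecast values are hypothetical, and a real setup would pull the forecast from a grid-data provider.

```python
# Pick the start hour that minimizes average grid carbon intensity
# for a job that needs a fixed number of contiguous hours.

def greenest_window(forecast, hours):
    """Return (start_index, average_intensity) of the cleanest window.

    forecast: hourly carbon intensities (e.g. gCO2 per kWh), one per hour.
    hours: length of the job in whole hours.
    """
    if hours <= 0 or hours > len(forecast):
        raise ValueError("hours must be between 1 and len(forecast)")

    window_sum = sum(forecast[:hours])
    best_sum, best_start = window_sum, 0
    # Slide the window one hour at a time, updating the running sum.
    for start in range(1, len(forecast) - hours + 1):
        window_sum += forecast[start + hours - 1] - forecast[start - 1]
        if window_sum < best_sum:
            best_sum, best_start = window_sum, start
    return best_start, best_sum / hours


if __name__ == "__main__":
    # Hypothetical forecast: intensity dips mid-day when solar output peaks.
    hourly_forecast = [500, 450, 300, 200, 180, 220, 400, 520]
    start, average = greenest_window(hourly_forecast, hours=3)
    print(f"Start the 3-hour job at hour {start} (average {average:.0f} gCO2/kWh)")
```

The same job run at the start of this made-up forecast would average more than double the intensity of the best window, which is the whole appeal of carbon-aware scheduling.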

Even AI architecture itself is evolving: models are being distilled, compressed, and optimized for energy efficiency — not just accuracy.

🌿 Sustainability is becoming a new measure of innovation.

💡 What We Can Do

While users can’t control how cloud servers are powered, small habits can help:

- Prefer AI tools that publish energy-use transparency reports.

- Batch your prompts: plan your questions before running long sessions.

- Combine similar questions or tasks into one detailed query. Instead of typing several separate prompts like “Write a tweet about AI” → “Make it funnier” → “Now add emojis”, try: “Write 3 tweet versions about the carbon impact of AI: one serious, one humorous, one with emojis.”

- Use “session summaries” (e.g., “Summarize all insights we’ve discussed”) instead of reloading or restarting threads.

- For simple tasks, use local or smaller models (e.g., Llama 3 8B) instead of massive cloud ones.

- Support open research on efficient model architectures.
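One way to see why combining prompts can help: in a typical chat, each follow-up is processed together with the conversation so far, so the work grows with every turn. The sketch below counts tokens under that simplifying assumption. The token counts are hypothetical, and real systems may cache earlier context, so treat this as a rough illustration rather than an energy measurement.

```python
# Compare tokens processed by several follow-up prompts vs. one combined prompt.
# Assumption: every turn re-reads the full conversation history (no caching).

def tokens_processed(turns):
    """Total tokens read and generated across a conversation.

    turns: list of (prompt_tokens, response_tokens) pairs, in order.
    """
    history = 0  # tokens in the conversation so far
    total = 0
    for prompt_tokens, response_tokens in turns:
        history += prompt_tokens
        total += history           # model reads the history plus the new prompt
        total += response_tokens   # model generates the response
        history += response_tokens
    return total


if __name__ == "__main__":
    # Hypothetical token counts for the tweet example.
    three_followups = [(10, 50), (5, 50), (5, 60)]
    one_combined = [(30, 160)]
    print("Three follow-up prompts:", tokens_processed(three_followups))
    print("One combined prompt:", tokens_processed(one_combined))
```

With these made-up numbers the three-step conversation processes nearly twice as many tokens as the single combined request for the same amount of generated text.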

Each small choice contributes to a more responsible digital ecosystem.

🌍 The Bottom Line

AI’s potential is extraordinary, from medical breakthroughs to global productivity gains. But intelligence comes with energy. If the future of AI is to be truly smart, it must also be sustainable.

“AI can change the world — but it shouldn’t cost the planet.”

As we stand on the edge of a new technological era, balancing progress with responsibility isn’t just good ethics, it’s good design.

Stay ahead of the curve... subscribe to Tech Arcade for weekly insights on AI, innovation, and the next big thing in tech!